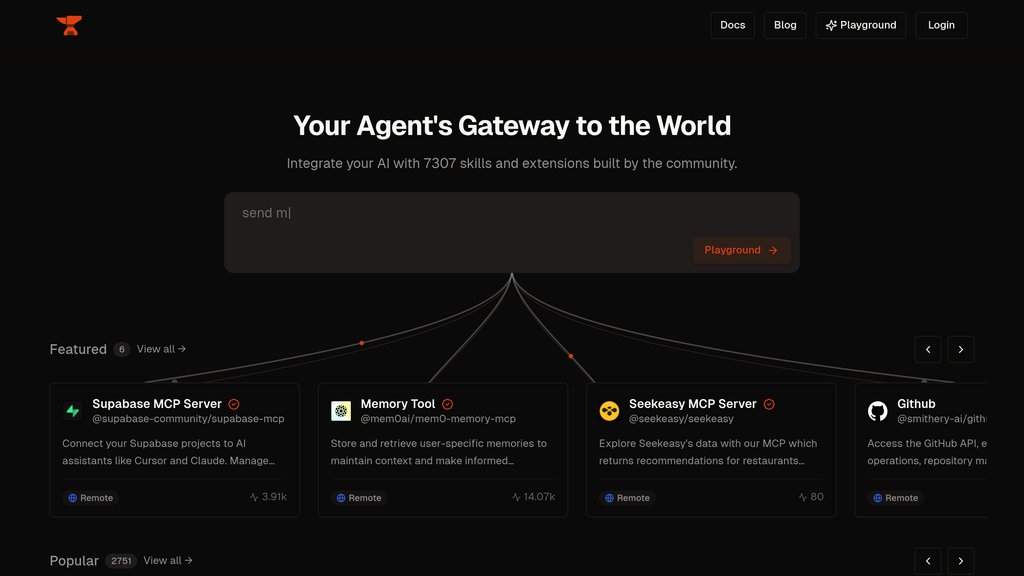

Smithery AI

Platform for AI tool extensions - discover and deploy capabilities

Introduction

What is Smithery AI?

Smithery AI is a hub for Model Context Protocol (MCP) servers, giving developers access to more than 7,300 pre-built tools and extensions. The platform serves as both a discovery registry and deployment infrastructure for MCP servers, which let language models work with external applications, APIs, and services. Smithery supports local installation, where a server runs on the user's machine, and hosted deployment, where it runs on Smithery's infrastructure. It works with popular development environments and supports several deployment approaches, including CLI and Docker.

Key Features

Extensive MCP Registry

Browse and search more than 7,300 community-developed MCP servers, organized by category and covering web automation, memory systems, API connections, and development utilities.

Dual Deployment Options

Choose a local installation for more security and control, or a hosted deployment for immediate access without setup.

Developer-Friendly Tools

Command-line tools, Docker support, and GitHub integration for developing, testing, and deploying servers.

Enterprise Security

Temporary handling of sensitive configuration, the option to manage tokens locally, and security measures intended for production use in organizations.
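One way to read temporary handling of sensitive configuration is that secrets are supplied when a server is launched, held only in memory, and never written to disk. The sketch below illustrates that general idea. It is not Smithery's implementation, and `build_launch_env`, `redact`, and `EXAMPLE_API_TOKEN` are hypothetical names chosen for illustration.

```python
import os


def build_launch_env(base_env: dict, secrets: dict) -> dict:
    """Merge secrets into a copy of the environment for a single launch.

    The input dictionaries are left unchanged and nothing is written to disk.
    """
    env = dict(base_env)
    env.update(secrets)
    return env


def redact(env: dict, secret_keys) -> dict:
    """Return a copy that is safe to log, with secret values masked."""
    hidden = set(secret_keys)
    return {key: ("***" if key in hidden else value) for key, value in env.items()}


# Hypothetical secret name and value, for illustration only.
secrets = {"EXAMPLE_API_TOKEN": "not-a-real-token"}
launch_env = build_launch_env({"PATH": os.environ.get("PATH", "")}, secrets)

# Log only the redacted view; the real values stay in memory.
print(redact(launch_env, secrets))
```

The resulting `launch_env` could be passed to a locally started server process, so the token exists only for the lifetime of that process.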

Universal Compatibility

Works with language model clients that support MCP, such as Claude Desktop and Cursor.
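Clients such as Claude Desktop typically register MCP servers in a JSON configuration file under an `mcpServers` key, where each entry names a command to launch. The sketch below builds such an entry in Python. The server ID `example-server` is hypothetical, and the `npx` command and arguments are an assumption about how a Smithery-listed server might be started; the server's own Smithery page is the authority on the exact install command.

```python
import json


def smithery_server_entry(server_id: str) -> dict:
    """Return one `mcpServers` entry that launches a server through npx.

    The command and arguments are assumptions for illustration only.
    """
    return {
        "command": "npx",
        "args": ["-y", "@smithery/cli@latest", "run", server_id],
    }


def add_server(config: dict, name: str, entry: dict) -> dict:
    """Return a copy of `config` with `entry` registered under `name`."""
    servers = dict(config.get("mcpServers", {}))
    servers[name] = entry
    return {**config, "mcpServers": servers}


# "example-server" is a hypothetical server ID.
config = add_server({}, "example-server", smithery_server_entry("example-server"))
print(json.dumps(config, indent=2))
```

Because `add_server` returns a new dictionary, existing entries in a loaded configuration are preserved and the original object is not modified.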

Use Cases

Web Automation: Developers can add browser automation, web data extraction, and API calls to language model workflows to give them access to real-time information.

Development Workflow Enhancement: Engineering teams can link language models to GitHub, VS Code, terminal operations, and other development tools for automated programming support.

Memory and Context Management: Applications that need persistent memory across sessions can use dedicated memory servers to retain user context and preferences.

Enterprise Integration: Businesses can connect language models to internal systems, databases, and corporate applications through custom or pre-existing MCP servers.

Research and Analytics: Researchers can extend language models with search functions, data analysis tools, and domain-specific capabilities.