LM Studio

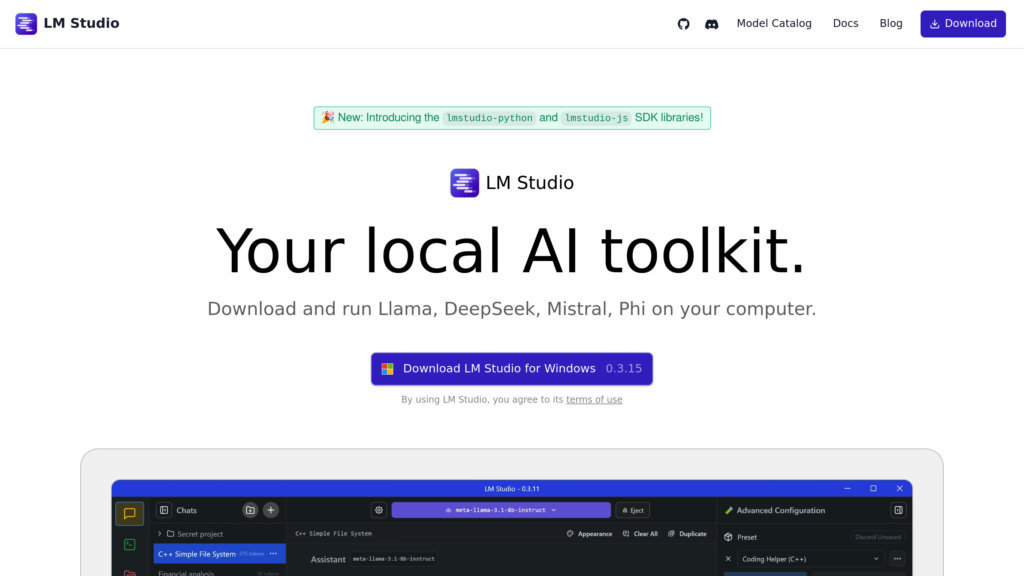

LM Studio: Run large language models offline on your personal computer

Introduction

LM Studio is a desktop application for running and testing large language models entirely on your own hardware. Once a model has been downloaded, no internet connection is needed. It runs on macOS, Windows, and Linux, and uses open-source inference engines such as llama.cpp and Apple's MLX. The application lets you browse and download LLMs from Hugging Face repositories, offers a chat interface similar to ChatGPT, and supports querying local documents through Retrieval Augmented Generation (RAG). It also includes a local API server that follows OpenAI's API format, so custom software and automation scripts can connect to it while your data and AI workloads stay under your control.

Key Features

Local LLM Execution

Run language models on your own computer without an internet connection, so prompts and data stay on your machine and the models remain available offline.

Model Discovery and Management

Search for, download, and manage open-source LLMs from Hugging Face repositories without leaving the application.

Chat Interface with Document Interaction

Chat with AI models through a simple interface, and ask questions about your local files using RAG, which passes relevant excerpts from those files to the model as context.
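To make the RAG idea concrete, here is a minimal sketch of the retrieval step. It is not LM Studio's implementation. Production RAG systems typically rank passages with embedding models, whereas this example swaps in plain word-count cosine similarity so that it runs with the standard library only. The function names (`tokenize`, `retrieve`, `build_prompt`) and the prompt wording are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question: str, chunks: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k chunks most similar to the question."""
    query = Counter(tokenize(question))
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine_similarity(query, Counter(tokenize(chunk))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, chunks: list[str], top_k: int = 1) -> str:
    """Assemble a prompt that puts the retrieved context before the question."""
    context = "\n".join(retrieve(question, chunks, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

The string returned by `build_prompt` is what would be sent to the model, so the model answers from the retrieved excerpt rather than from its training data alone.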

OpenAI-Compatible API Server

Serve local models through a REST API that follows the format of OpenAI's endpoints, enabling integration with third-party applications and scripting tools.
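As an illustration, a script might talk to the local server roughly as follows. This is a sketch under stated assumptions: the server is running at `http://localhost:1234/v1` (adjust if your setup differs), it accepts OpenAI-style chat completion requests, and the model name passed in is a placeholder for whichever model you have loaded. The helper names `build_chat_request` and `chat` are made up for this example.

```python
import json
import urllib.request

# Assumption: the local server listens on this address; change it if needed.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(
    model: str, prompt: str, base_url: str = BASE_URL
) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,  # placeholder: use the identifier of a loaded model
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(
    model: str, prompt: str, base_url: str = BASE_URL, timeout: float = 60.0
) -> str:
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(model, prompt, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-format responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running and a model loaded, `print(chat("your-model-name", "Say hello in one sentence."))` would print the model's reply. Because the request follows OpenAI's format, existing OpenAI client code can often be reused by pointing its base URL at the local server.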

Cross-Platform Support

Runs on macOS (including Apple Silicon), Windows (x64 and ARM64), and Linux, with execution engines such as llama.cpp and, on Apple Silicon, MLX.

Advanced Customization and Developer Tools

Includes command-line interface (CLI) utilities, software development kits (SDKs) for languages such as Python, a developer mode, and fine-grained controls for model parameters and workflows.

Use Cases

AI Application Development: Developers can build and test AI-driven applications against local models, using the OpenAI-compatible API to integrate them.

Privacy-Focused AI Research: Academics and researchers can run LLM experiments on-premises, so sensitive information never leaves their own environment.

Offline Document Analysis: Analyze and query personal or confidential documents with AI in a fully offline setting, which suits material that must not be uploaded to external services.

Custom AI Chatbots: Build internal chatbots that work on proprietary data without relying on external services, improving security and autonomy.

Model Experimentation and Evaluation: Test and compare different open-source language models in one application to find the best fit for a specific requirement.
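For the model comparison use case, a small harness like the one below can run the same prompt against several models and record each reply with its response time. This is only a sketch: `compare_models` and its `ask` parameter are hypothetical names, and `ask` stands for any function you supply that sends a prompt to a named model (for example, through the local API server) and returns the reply text.

```python
import time
from typing import Callable


def compare_models(
    models: list[str],
    prompt: str,
    ask: Callable[[str, str], str],
) -> list[dict]:
    """Run one prompt against each model and collect replies and timings.

    `ask(model, prompt)` is supplied by the caller and must return the
    model's reply as a string.
    """
    results = []
    for model in models:
        start = time.perf_counter()
        reply = ask(model, prompt)
        elapsed = time.perf_counter() - start
        results.append({"model": model, "reply": reply, "seconds": elapsed})
    return results
```

Keeping the transport behind `ask` means the same harness works whether replies come from a local server, an SDK, or a stub used in tests. Timings measured this way include any model loading, so the first call to each model may be slower than later ones.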